Reading notes: Federated Learning with Only Positive Labels

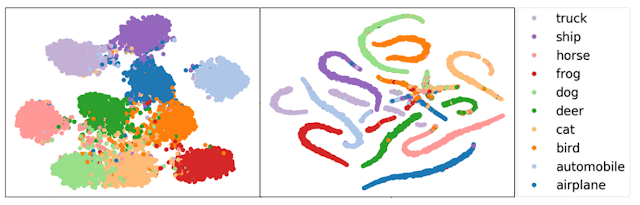

This blog post is a reading note on the paper "Federated Learning with Only Positive Labels" by Yu, Felix X., et al., ICML 2020. Broadly speaking, the authors consider learning a multi-class classification model in the federated setting, where each user has access to positive data associated with only a single class. Specifically, they propose a generic framework named Federated Averaging with Spreadout (FedAwS), in which the server imposes a geometric regularizer after each round to encourage classes to spread out in the embedding space. They show, both theoretically and empirically, that FedAwS can almost match the performance of conventional learning where users have access to negative labels.

Introduction

In this work, the authors consider learning a classification model in the federated learning setup, where each user has access to data from only a single class. Examples of such settings include decentralized training of face recognition or speaker identification models, where the classifier of the users has sensitive ...
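To make the server-side regularizer more concrete, here is a minimal NumPy sketch. It assumes the server holds the class-embedding matrix `W` (one row per class) and that the spreadout regularizer is a squared hinge on pairwise distances with a margin ν (`margin` below). This is my reading of the idea, and the authors' exact form and scaling may differ. The intuition is that each client only sees its own class, so its local loss can only pull its examples toward its own class vector, and nothing stops all class vectors from collapsing together. The server step pushes apart any two classes that sit closer than `margin`. The function names (`spreadout_penalty`, `spreadout_grad`, `server_spreadout_step`) are hypothetical, and client training and averaging are omitted.

```python
import numpy as np


def spreadout_penalty(W: np.ndarray, margin: float) -> float:
    """Squared-hinge spreadout penalty (assumed form, for illustration).

    W has shape (C, d): one embedding row per class, held by the server.
    Every ordered pair (c, c') with c != c' closer than `margin`
    contributes (margin - ||w_c - w_c'||)^2.
    """
    diff = W[:, None, :] - W[None, :, :]   # (C, C, d): w_c - w_c'
    dist = np.linalg.norm(diff, axis=-1)   # (C, C) pairwise distances
    hinge = np.maximum(0.0, margin - dist)
    np.fill_diagonal(hinge, 0.0)           # skip c == c'
    return float(np.sum(hinge ** 2))


def spreadout_grad(W: np.ndarray, margin: float, eps: float = 1e-12) -> np.ndarray:
    """Analytic gradient of spreadout_penalty with respect to W."""
    diff = W[:, None, :] - W[None, :, :]
    dist = np.linalg.norm(diff, axis=-1)
    hinge = np.maximum(0.0, margin - dist)
    np.fill_diagonal(hinge, 0.0)
    # Each unordered pair appears twice in the sum, so row c receives
    # -4 * hinge * (w_c - w_c') / ||w_c - w_c'|| from every active neighbour c'.
    coef = -4.0 * hinge / (dist + eps)
    return np.sum(coef[:, :, None] * diff, axis=1)


def server_spreadout_step(W: np.ndarray, margin: float, lr: float) -> np.ndarray:
    """Hypothetical server update: after averaging the clients' updates,
    take one gradient step on the spreadout penalty to push classes apart."""
    return W - lr * spreadout_grad(W, margin)


# Demo: 4 class embeddings in 8 dimensions, nearly collapsed to one point,
# mimicking the collapse that positive-only local training can cause.
rng = np.random.default_rng(0)
W = 0.01 * rng.standard_normal((4, 8))
before = spreadout_penalty(W, margin=1.0)
for _ in range(200):
    W = server_spreadout_step(W, margin=1.0, lr=0.01)
after = spreadout_penalty(W, margin=1.0)
print(f"penalty before: {before:.3f}, after: {after:.3f}")
```

Repeated server steps reduce the penalty, and it reaches zero once every pair of class embeddings is at least `margin` apart. Because only the server holds the full matrix `W`, this regularization does not require any client to see negative examples.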