Reading notes: Impact of Adversarial Examples on Deep Learning Models for Biomedical Image Segmentation

This post contains my reading notes on the paper "Impact of Adversarial Examples on Deep Learning Models for Biomedical Image Segmentation" by Utku Ozbulak et al., MICCAI 2019. Broadly speaking, the authors try to generate more realistic adversarial attacks on medical image segmentation tasks. Specifically, they design a novel method that produces an adversarial example which, with limited perturbation, misleads the trained model into predicting a targeted, realistic segmentation mask.

Introduction

Recent studies have developed various deep learning-based models for medical imaging systems. These models have shown strong performance on a number of clinical prediction tasks while requiring minimal manual labor. However, deep learning-based models come with a major security problem: they can be completely fooled by so-called adversarial examples, i.e., inputs carrying an imperceptibly small perturbation that causes the model to misclassify them. Consequently, users of these systems may be exposed to unforeseen hazards such as diagnostic errors and insurance fraud.

Although the effect of adversarial examples has been studied extensively for classification tasks on non-medical datasets, only recently have studies started to investigate their influence on segmentation problems. Existing attack algorithms aim to force the model to mis-segment every pixel, which is unrealistic in the medical imaging domain and easily detected by the human eye.

In this study, the authors propose a novel method for generating targeted adversarial examples that is tailored to medical image segmentation systems.

Framework

There are two major differences between classification and segmentation when it comes to generating adversarial examples:

1. In classification, the adversarial target is often a single class. In segmentation, however, the target is a mask, so the goal is to change the predictions for a large number of pixels instead of a single label.

2. When generating the perturbation for segmentation, the gradient information comes from a large number of sources (pixels), which makes it hard to tune the perturbation learning rate.

Static Segmentation Mask (SSM)

To generate a targeted segmentation attack, there are two goals: (1) increase the predicted likelihood of the foreground pixels selected in the target adversarial mask, and (2) decrease the predicted likelihood of all pixels that are not in this mask. A straightforward formulation that achieves this is:

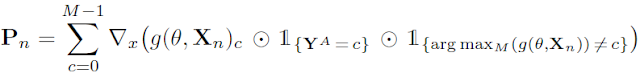

Here P is the perturbation, g is the model's prediction, M is the number of classes (M = 2 if there are only foreground and background), X is the input image, and Y is the target mask.
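
To make the SSM objective concrete, here is a minimal Python sketch. It assumes a hypothetical toy "segmentation model" that classifies each pixel independently with a logistic unit (the constants `W` and `B` are made up), so the gradient can be written by hand; a real attack would backpropagate through the actual network. The objective sums, over all pixels, the probability the model assigns to the target label in Y, and P is updated by plain gradient ascent with a fixed step size (also an assumption). No norm constraint on P is applied here.

```python
import math

# Hypothetical toy "segmentation model" (assumption, not the paper's network):
# each pixel is classified independently, g(x)_1 = sigmoid(W * x + B), g(x)_0 = 1 - g(x)_1.
W, B = 4.0, -2.0


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(pixels):
    """Per-pixel class probabilities [g_0, g_1] for a flat list of pixel values."""
    return [[1.0 - sigmoid(W * x + B), sigmoid(W * x + B)] for x in pixels]


def ssm_objective(image, perturbation, target_mask):
    """Sum over ALL pixels of the probability assigned to the target label Y_i."""
    probs = predict([x + p for x, p in zip(image, perturbation)])
    return sum(probs[i][y] for i, y in enumerate(target_mask))


def ssm_gradient(image, perturbation, target_mask):
    """Analytic gradient of ssm_objective w.r.t. P; every pixel contributes."""
    grads = []
    for x, p, y in zip(image, perturbation, target_mask):
        p1 = sigmoid(W * (x + p) + B)
        d_p1 = W * p1 * (1.0 - p1)                # d g_1 / d x
        grads.append(d_p1 if y == 1 else -d_p1)   # d g_0 / d x = -d g_1 / d x
    return grads


image = [0.1, 0.2, 0.8, 0.9]   # toy 1-D "image" of four pixels
target = [1, 0, 0, 1]          # target adversarial mask Y (M = 2 classes)
P = [0.0] * len(image)
step = 0.1                     # fixed step size (assumption)

before = ssm_objective(image, P, target)
for _ in range(20):
    grad = ssm_gradient(image, P, target)
    P = [p + step * g for p, g in zip(P, grad)]   # gradient ascent on the objective
after = ssm_objective(image, P, target)
```

Note that pixels 1 and 3 already match the target from the start but keep receiving gradient on every iteration; this is the inefficiency the adaptive mask is meant to remove.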

Adaptive Segmentation Mask (ASM)

As noted in challenge (1), a segmentation attack has to change a large number of labels at once, so the gradient is sourced from a large number of pixels (the entire image for SSM). However, during generation the predictions for many pixels may already match the target and need no further optimization. To make the algorithm more efficient, the authors add an adaptive mask to the optimization, which ensures that at each iteration the gradient comes only from pixels whose predicted label still differs from the target adversarial mask. Conceptually, the SSM objective is multiplied by an indicator that equals 1 only for those pixels.
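
The adaptive mask can be sketched as follows, again assuming a hypothetical per-pixel logistic model (`W` and `B` are made-up constants) in place of a real network. The mask is 1 only for pixels whose predicted label differs from the target, and the per-pixel gradient is multiplied by it, so pixels that already match the target contribute nothing. This mirrors the description above and is not the authors' code; treating the mask as a constant during differentiation (it comes from an argmax) is an assumption.

```python
import math

# Hypothetical per-pixel logistic "model" standing in for a real network (assumption).
W, B = 4.0, -2.0


def foreground_prob(x):
    return 1.0 / (1.0 + math.exp(-(W * x + B)))


def adaptive_mask(pixels, target_mask):
    """1 where the predicted label still differs from the target label, else 0."""
    return [int((1 if foreground_prob(x) > 0.5 else 0) != y)
            for x, y in zip(pixels, target_mask)]


def asm_gradient(pixels, target_mask):
    """Gradient of sum_i mask_i * g(x_i)_{Y_i}; already-correct pixels contribute 0."""
    grads = []
    for x, y, m in zip(pixels, target_mask, adaptive_mask(pixels, target_mask)):
        p1 = foreground_prob(x)
        d_p1 = W * p1 * (1.0 - p1)
        grads.append(m * (d_p1 if y == 1 else -d_p1))
    return grads


image = [0.1, 0.2, 0.8, 0.9]
target = [1, 0, 0, 1]
print(adaptive_mask(image, target))  # [1, 0, 1, 0]
```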

Dynamic Perturbation Multiplier (DPM)

As for challenge (2), it is hard to find a constant learning rate that works for all pixels throughout the optimization. A large value makes the perturbation bigger and thus easier for a human to detect, while a small value can cause the optimization to stall. The authors therefore employ a dynamic perturbation multiplier, which increases the learning rate as the optimization proceeds, since the remaining pixels are relatively "hard" to modify.
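
Below is a minimal sketch of an adaptive-mask loop with a growing step multiplier. The geometric schedule `base_step * growth**n` and the toy per-pixel logistic model are hypothetical stand-ins chosen for illustration; the paper defines its own multiplier rule, which is not reproduced here. Setting `growth=1.0` recovers a constant learning rate, which makes the two easy to compare.

```python
import math

# Hypothetical per-pixel logistic "model" (assumption, not the paper's network).
W, B = 4.0, -2.0


def foreground_prob(x):
    return 1.0 / (1.0 + math.exp(-(W * x + B)))


def label(x):
    return 1 if foreground_prob(x) > 0.5 else 0


def attack(image, target, base_step=0.01, growth=1.2, max_iters=100):
    """Adaptive-mask gradient ascent whose step multiplier grows every iteration.

    The geometric schedule is a hypothetical stand-in for the paper's DPM rule.
    Returns the perturbation and the number of iterations that were needed.
    """
    P = [0.0] * len(image)
    multiplier = base_step
    for n in range(max_iters):
        x_adv = [x + p for x, p in zip(image, P)]
        wrong = [label(x) != y for x, y in zip(x_adv, target)]
        if not any(wrong):
            return P, n  # every pixel now matches the target mask
        for i, (x, y) in enumerate(zip(x_adv, target)):
            if wrong[i]:  # adaptive mask: only still-wrong pixels are updated
                p1 = foreground_prob(x)
                grad = W * p1 * (1.0 - p1)
                P[i] += multiplier * (grad if y == 1 else -grad)
        multiplier *= growth  # later ("harder") iterations take larger steps
    return P, max_iters


image = [0.1, 0.2, 0.8, 0.9]
target = [1, 0, 0, 1]
P_dyn, iters_dyn = attack(image, target)               # growing multiplier
P_fix, iters_fix = attack(image, target, growth=1.0)   # constant learning rate
```

On this toy input the growing multiplier reaches the target mask in fewer iterations than the constant step. The trade-off is the one described above: larger steps can overshoot and produce a larger, more visible perturbation.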

Finally, the authors call their complete approach the Adaptive Segmentation Mask Attack (ASMA).

Experiments

Table 1: Experimental results. [1]

Table 1 presents the quantitative results of the proposed adversarial example generation algorithm, evaluated on the Glaucoma and ISIC Skin Lesion datasets. It reports the size of the perturbation in terms of L-norm distances, as well as the attack performance in terms of intersection over union (IoU) and pixel accuracy (PA). According to these results, the proposed algorithm performs best among all compared methods.
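
For readers less familiar with these metrics, here is a small sketch of how they can be computed on flat label masks. I assume that, for a targeted attack, IoU and PA compare the model's prediction on the adversarial example with the target adversarial mask, and I use the L2 and L∞ norms as examples of perturbation size; the exact norms and class averaging used in the paper may differ.

```python
import math


def iou(pred, target, cls=1):
    """Intersection over union of class `cls` between two flat label masks."""
    inter = sum(1 for p, t in zip(pred, target) if p == cls and t == cls)
    union = sum(1 for p, t in zip(pred, target) if p == cls or t == cls)
    return inter / union if union else 1.0   # both masks empty: treat as a perfect match


def pixel_accuracy(pred, target):
    """Fraction of pixels whose predicted label equals the target label."""
    return sum(p == t for p, t in zip(pred, target)) / len(target)


def l2_norm(perturbation):
    return math.sqrt(sum(v * v for v in perturbation))


def linf_norm(perturbation):
    return max(abs(v) for v in perturbation)


adv_prediction = [0, 1, 1, 1, 0, 0]   # model output on the adversarial example (toy)
target_mask = [0, 1, 1, 0, 0, 1]      # target adversarial mask (toy)
print(iou(adv_prediction, target_mask))             # 0.5
print(pixel_accuracy(adv_prediction, target_mask))  # 0.6666666666666666
```

Under this reading, higher IoU and PA mean the adversarial prediction matches the target more closely, while smaller norms mean a less perceptible perturbation.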

My Opinion

Overall, I think this paper is of good quality. The motivation is clearly explained for the medical domain: a medical segmentation attack requires a realistic target mask, and multiple targets (each pixel has a label) need to be considered during the attack. The evolution from SSM to ASMA is clearly explained, and the effectiveness of each change is supported by the experiments. Finally, the idea is novel: to the best of my knowledge, generating targeted attacks is new in medical image segmentation and even in natural image segmentation.

However, I think a few points could be addressed:

1. I checked the source code: instead of using only the loss described above, the authors also use a distance loss between the prediction and the target mask. I wonder how much this loss contributes to the results.

2. I would like to see how effective the attack is against defense methods such as adversarial training.

Reference

[1] Ozbulak, Utku, Arnout Van Messem, and Wesley De Neve. "Impact of Adversarial Examples on Deep Learning Models for Biomedical Image Segmentation." International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer, Cham, 2019.