Reading notes: Adversarial Examples Are Not Bugs, They Are Features

Source: Ilyas, Andrew, et al. "Adversarial examples are not bugs, they are features." arXiv preprint arXiv:1905.02175 (2019). https://arxiv.org/abs/1905.02175

Experiments

Introduction

Over the past few years, the adversarial attacks - which aim to force the machine learning systems to make misclassification by adding slightly perturbation - have received signification attention in the community. There are a lot of works show that intentional perturbation which is imperceptible to human can easily fool a deep learning classifier. In response to the threat, there has been much work on defensive techniques that help models against adversarial examples. But none of them really answer the fundamental question: Why do these adversarial attacks arise?

By far, some previous research works view the adversarial examples as aberrations come from the high dimensional nature of the input space that will eventually disappear when we have enough training dataset or better training algorithms. Different from this point of view, a group of MIT researchers proposes a new perspective on the adversarial examples in this paper:

"Adversarial vulnerability is a direct result of our models’ sensitivity to well-generalizing features in the data."

The researchers suggest that the classifiers are often trained to maximize accuracy solely without much prior context about the human-related concept on classes. Inevitable, classifiers would use any available signals to deliver the result even some signals are incomprehensible to humans or likely be ignored by humans. For example, it is more natural to use "eyes" or "ears" than to use "fur" to distinguish dog and cat as shown in the following figure. They posit that the model relies on those "non-robust" features, leads to adversarial perturbations.

|

| Cat or Dog? |

Experiments

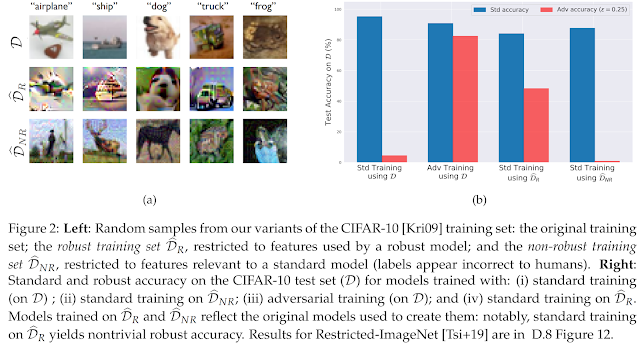

To support this theory, they show that it is possible to disentangle robust and non-robust features in some standard image dataset. A conceptual description of the experiments is shown in Figure 1. Given a training dataset, they can construct a "robustified" dataset, in which samples primarily contain robust features. By training on this dataset, the model should have good robust accuracy (Figure 1(a)). On the other hand, they construct a dataset with images that attacked to predict a wrong label. They use the perturbed images paired with a misclassified label to build a new dataset. They show that this dataset suffices to train a classifier with good performance on the standard test set (Figure 2(b)). This indicates that non-robust features are indeed valuable features.

Results

Figure 2 shows the experiment results. By restricting the dataset to only robust feature, the standard training results in good accuracy in both standard and adversarial setting. While training on "non-robust" dataset, the model is vulnerable to adversarial. Overall, the findings corroborate the hypothesis that adversarial examples arise from non-robust features of data itself.

The standard training on the "mislabeled" dataset actually generalize to the original test set, as shown in Table 1, which indicates that non-robust feature is useful for classification in the standard-setting.

The standard training on the "mislabeled" dataset actually generalize to the original test set, as shown in Table 1, which indicates that non-robust feature is useful for classification in the standard-setting.

The experiment establishes adversarial vulnerability as a purely human-centric phenomenon, since, from the supervised learning point of view, non-robust features are as important as robust features. For the future, if we want a model to be human-specified robustness, we need to incorporate priors into architecture or training process.

More in the Paper

In the paper, the authors also provide a precise framework for discussing the robust and non-robust feature, more experiments result and a theoretical model for studying these features.

Results

Figure 2 shows the experiment results. By restricting the dataset to only robust feature, the standard training results in good accuracy in both standard and adversarial setting. While training on "non-robust" dataset, the model is vulnerable to adversarial. Overall, the findings corroborate the hypothesis that adversarial examples arise from non-robust features of data itself.

The standard training on the "mislabeled" dataset actually generalize to the original test set, as shown in Table 1, which indicates that non-robust feature is useful for classification in the standard-setting.

The standard training on the "mislabeled" dataset actually generalize to the original test set, as shown in Table 1, which indicates that non-robust feature is useful for classification in the standard-setting.The experiment establishes adversarial vulnerability as a purely human-centric phenomenon, since, from the supervised learning point of view, non-robust features are as important as robust features. For the future, if we want a model to be human-specified robustness, we need to incorporate priors into architecture or training process.

More in the Paper

In the paper, the authors also provide a precise framework for discussing the robust and non-robust feature, more experiments result and a theoretical model for studying these features.

Comments

Post a Comment